Langtrace

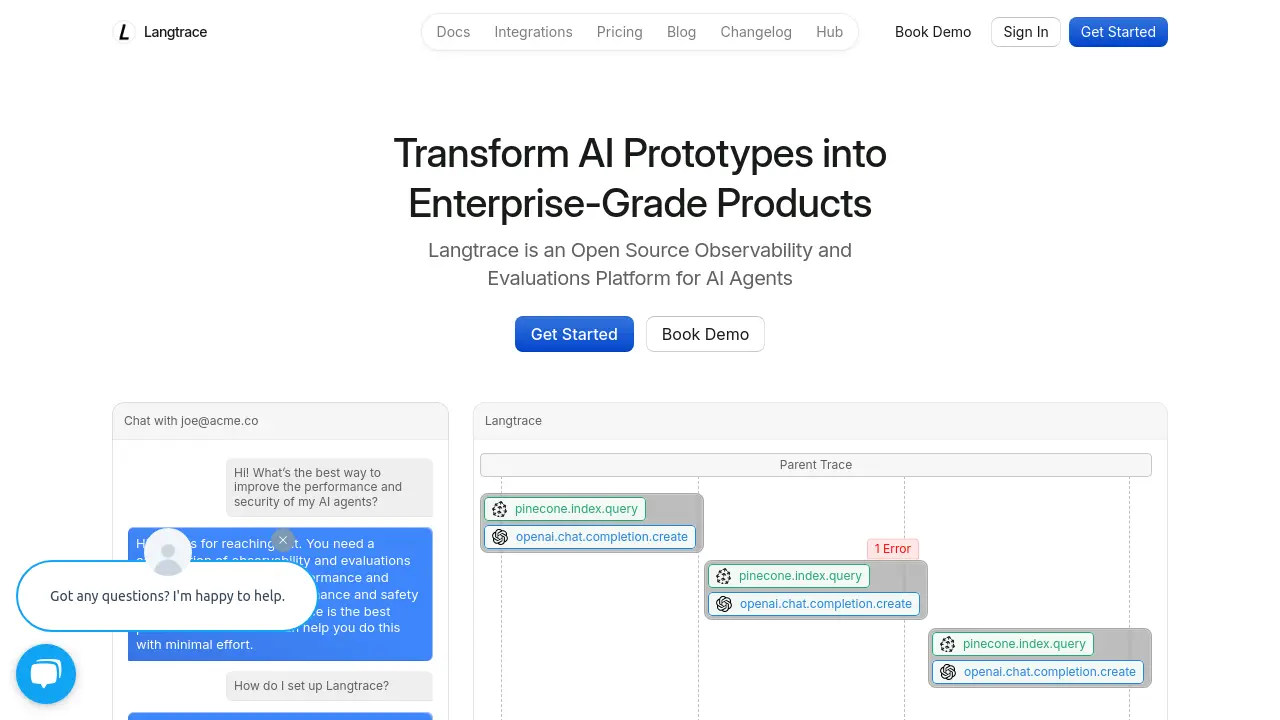

Open Source Observability and Evaluations Platform for AI Agents

Description

Langtrace is an open-source observability and evaluations platform designed for AI agents. It empowers developers to transform AI prototypes into robust, enterprise-grade applications by providing comprehensive tools to monitor and evaluate AI agent performance. The platform focuses on critical aspects such as accuracy, token usage, operational costs, and inference latency.

Setup is non-intrusive and requires just two lines of code, with SDK support for both Python and TypeScript. Langtrace integrates with popular AI frameworks such as CrewAI, DSPy, LlamaIndex, and LangChain, as well as a range of LLM providers and vector databases. Key functionality includes detailed API request tracing, prompt version control, and a playground for comparing prompt performance across models, backed by enterprise-grade security and SOC 2 Type II compliance.
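As a rough illustration of the two-line setup in Python, the sketch below wraps the import and `langtrace.init(...)` call in a small helper so an application still starts when tracing is not configured. It assumes the `langtrace-python-sdk` package and an API key stored in a `LANGTRACE_API_KEY` environment variable. The helper name `enable_tracing` is hypothetical and not part of the SDK.

```python
import os
from typing import Optional


def enable_tracing(api_key: Optional[str] = None) -> bool:
    """Initialise Langtrace if possible; return True only when it was initialised.

    `enable_tracing` is a hypothetical helper for this sketch, not an SDK function.
    """
    api_key = api_key or os.environ.get("LANGTRACE_API_KEY")
    if not api_key:
        return False  # no key configured, so run without tracing

    try:
        # Line 1 of the setup: import the SDK (pip install langtrace-python-sdk).
        from langtrace_python_sdk import langtrace
    except ImportError:
        return False  # SDK not installed, so run without tracing

    # Line 2 of the setup: initialise with the project API key.
    langtrace.init(api_key=api_key)
    return True


# Call this early, ideally before importing LLM or framework modules,
# so that their calls can be instrumented.
tracing_on = enable_tracing()
print("Langtrace tracing enabled:", tracing_on)
```

In an application that always has the SDK and key available, the helper reduces to the import and the `langtrace.init(api_key=...)` call.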

Key Features

- Open Source Platform: Fully accessible source code on GitHub for customization, audit, and contribution.

- Observability & Tracing: Track vital metrics like token usage, cost, latency, and accuracy with automated GenAI stack tracing and dashboards.

- AI Evaluations: Measure baseline performance and curate datasets for automated evaluations and fine-tuning.

- Prompt Version Control: Store, version, deploy, or roll back prompts with ease.

- Prompt Playground: Compare the performance of prompts across different AI models.

- Simple SDK Integration: Setup takes two lines of code, with support for Python and TypeScript.

- Broad Framework Support: Integrates with popular AI frameworks (CrewAI, DSPy, LlamaIndex, LangChain), LLM providers, and vector databases.

- Enterprise-Grade Security: SOC 2 Type II certified, with industry-leading security protocols and encryption.
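To make the metrics listed under Observability & Tracing concrete, here is a minimal, library-free sketch of recording token usage, cost, and latency per LLM call. It is not how Langtrace is implemented. The `Tracer` and `CallRecord` classes, the `fake_llm` stand-in, and the price table are all hypothetical and exist only for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical price in USD per 1,000 tokens, for illustration only.
PRICE_PER_1K_TOKENS: Dict[str, float] = {"example-model": 0.002}


@dataclass
class CallRecord:
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_s: float

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    @property
    def cost_usd(self) -> float:
        return self.total_tokens / 1000 * PRICE_PER_1K_TOKENS[self.model]


@dataclass
class Tracer:
    records: List[CallRecord] = field(default_factory=list)

    def trace(self, model: str, call: Callable[[], Tuple[str, int, int]]) -> str:
        """Run `call`, which returns (text, prompt_tokens, completion_tokens),
        and record its token counts and wall-clock latency."""
        start = time.perf_counter()
        text, prompt_tokens, completion_tokens = call()
        latency = time.perf_counter() - start
        self.records.append(CallRecord(model, prompt_tokens, completion_tokens, latency))
        return text

    def summary(self) -> Dict[str, float]:
        return {
            "calls": len(self.records),
            "total_tokens": sum(r.total_tokens for r in self.records),
            "total_cost_usd": sum(r.cost_usd for r in self.records),
            "max_latency_s": max((r.latency_s for r in self.records), default=0.0),
        }


def fake_llm() -> Tuple[str, int, int]:
    """Stand-in for a real LLM call: 120 prompt tokens, 80 completion tokens."""
    return "hello", 120, 80


tracer = Tracer()
tracer.trace("example-model", fake_llm)
tracer.trace("example-model", fake_llm)
print(tracer.summary())
```

An observability platform collects records like these automatically across the stack and aggregates them into dashboards, instead of requiring a hand-written wrapper around each call.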

Use Cases

- Improving AI agent performance and security.

- Monitoring and optimizing token usage and operational costs for LLM applications.

- Tracking and reducing inference latency in AI systems.

- Evaluating and enhancing the accuracy of AI models and agents.

- Managing, versioning, and deploying prompts for AI applications.

- Transitioning AI prototypes to production-ready, enterprise-grade systems.

- Debugging and troubleshooting AI applications through detailed tracing.

Frequently Asked Questions

What’s the best way to improve the performance and security of my AI agents?

You need both observability and evaluations: observability to measure how your agents perform, and evaluations to iterate toward better performance and safety. Langtrace provides both with minimal setup effort.

What frameworks do you support?

Langtrace supports CrewAI, DSPy, LlamaIndex, and LangChain. We also support a wide range of LLM providers and vector databases out of the box.
