Narrow AI

Take the Engineer out of Prompt Engineering

Description

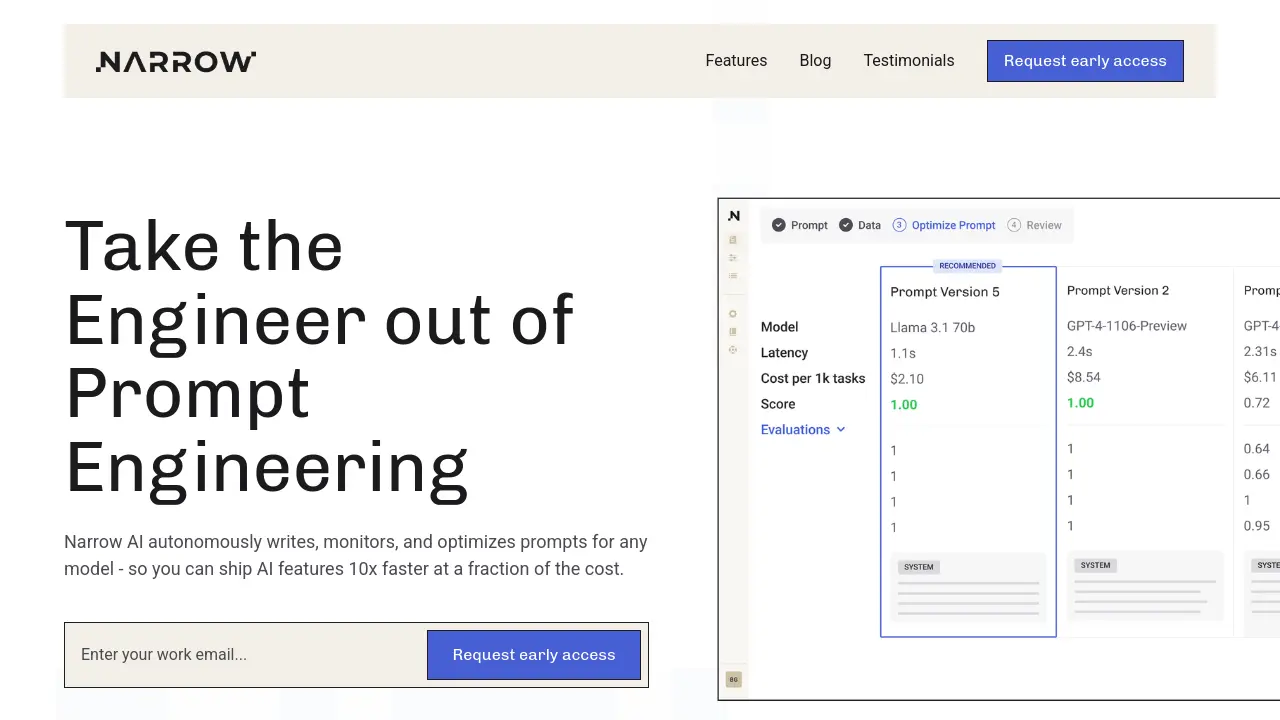

Narrow AI is a platform that automates prompt engineering for large language models (LLMs). It writes, monitors, and optimizes prompts autonomously, with the aim of keeping them effective across different AI models and shortening the development lifecycle for AI features.

With Narrow AI, teams can reportedly ship LLM-powered features up to 10 times faster than with traditional methods. The platform also aims to cut the operating cost of AI model usage by optimizing prompts for efficiency and by making cheaper models viable. It lets teams test new models quickly, compare prompt performance across LLMs, benchmark cost and latency, and deploy each prompt on the model best suited to its use case, while keeping prompts adaptable as new models emerge.
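The idea of benchmarking models and deploying on the optimal one can be illustrated with a minimal sketch. This is not Narrow AI's API or method: the `BenchmarkResult` type, the `pick_model` helper, the model names, and every number below are hypothetical, and the selection rule (cheapest model that meets a quality floor and a latency ceiling) is just one plausible reading of "optimal".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BenchmarkResult:
    model: str                # placeholder model name, not a real product
    quality: float            # fraction of evaluation cases passed (0.0 to 1.0)
    cost_per_1k_calls: float  # USD, made-up figure for illustration
    p95_latency_ms: float     # made-up figure for illustration


def pick_model(
    results: List[BenchmarkResult],
    min_quality: float,
    max_latency_ms: float,
) -> Optional[BenchmarkResult]:
    """Return the cheapest result that meets both constraints, or None."""
    eligible = [
        r for r in results
        if r.quality >= min_quality and r.p95_latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda r: r.cost_per_1k_calls, default=None)


# Hypothetical benchmark table for one prompt run against three models.
results = [
    BenchmarkResult("large-model", 0.96, 30.00, 1800.0),
    BenchmarkResult("medium-model", 0.94, 6.00, 900.0),
    BenchmarkResult("small-model", 0.81, 0.50, 400.0),
]

best = pick_model(results, min_quality=0.90, max_latency_ms=1500.0)
print(best.model if best else "no model meets the constraints")
```

With these invented figures the large model is excluded on latency and the small one on quality, so the medium model is selected. Tightening or loosening either threshold changes which model wins, which is the trade-off that cost and latency benchmarking is meant to expose.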

Key Features

- Autonomous Prompt Writing: Automatically generate expert-level prompts for any model.

- Automated Prompt Optimization: Continuously optimize prompts for quality, cost, and speed.

- Cost Reduction: Reduce AI spend (reportedly by up to 95%) by using cheaper models effectively.

- Model Testing & Comparison: Quickly test and compare prompt performance across various LLMs.

- Performance Benchmarking: Benchmark cost and latency across different models.

- Seamless Model Adaptation: Automatically adapt existing prompts for newly released models.

- Optimized Deployment: Deploy prompts on the most suitable model for each use case.

- Production Data Curation: Curate datasets for optimization directly from production logs.

- One-Click Prompt Retraining: Retrain prompts for smaller, more efficient models in one click.

Use Cases

- Accelerating AI feature development cycles.

- Reducing operational costs for LLM usage.

- Optimizing prompt performance for accuracy and efficiency.

- Comparing and selecting optimal LLMs for specific tasks.

- Migrating prompts seamlessly between different AI models.

- Improving response latency for AI-driven applications.

- Streamlining prompt engineering workflows for development teams.
