Rubra

Open-Weight LLMs Enhanced for Tool-Calling Capabilities

Description

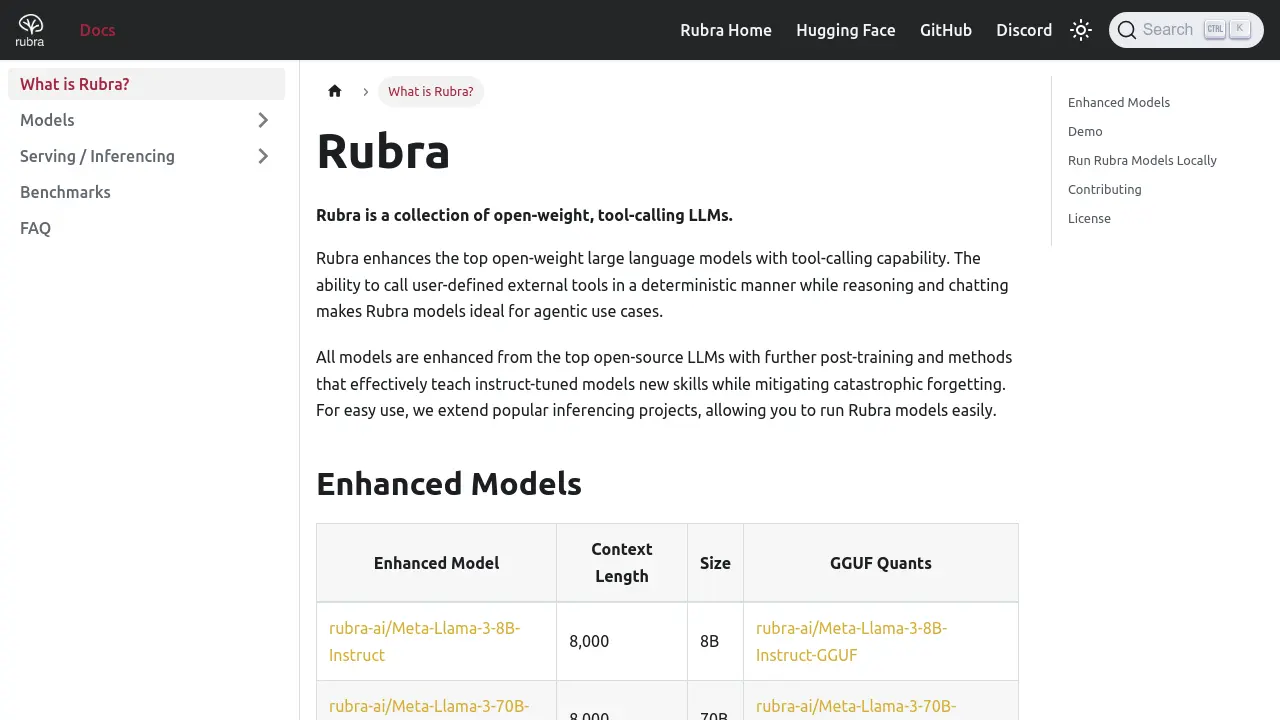

Rubra is a suite of open-weight large language models (LLMs) enhanced for tool calling. It builds on leading open-weight LLMs, such as Llama 3, Mistral, Gemma, Phi-3, and Qwen2, and adds the ability to interact deterministically with user-defined external tools. This capability comes from post-training techniques developed to teach new skills like tool usage while mitigating catastrophic forgetting, so the models retain their original reasoning and chat performance.

The primary goal of Rubra is to support agentic applications in which LLMs need to perform actions or retrieve information from external systems. To ease deployment and experimentation, Rubra provides extensions for the popular inference libraries llama.cpp and vLLM, so users can run the enhanced models locally. The tool-calling interface is compatible with the OpenAI format, which keeps it familiar for developers. Quantized GGUF versions are also available for resource-constrained environments, and a free demo is accessible via Hugging Face Spaces.
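As an illustration of what an OpenAI-format tool-calling request looks like, the following minimal sketch builds a chat request that declares one tool. The model name `rubra-model-placeholder`, the `get_weather` tool, and the `build_tool_request` helper are hypothetical and chosen only for this example; the payload layout follows the general OpenAI chat-completions convention and is not taken from Rubra's own documentation.

```python
import json

def build_tool_request(model, user_message, tools):
    """Assemble an OpenAI-style chat request body that advertises tools."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": tools,
        # "auto" lets the model decide whether to call a tool or answer directly.
        "tool_choice": "auto",
    }

# Hypothetical tool definition: a JSON Schema describes the arguments.
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name"},
            },
            "required": ["city"],
        },
    },
}

payload = build_tool_request(
    "rubra-model-placeholder",  # assumption: replace with the model you serve
    "What's the weather in Paris?",
    [get_weather_tool],
)
print(json.dumps(payload, indent=2))
```

A payload like this would typically be sent to the chat-completions endpoint of a locally running, OpenAI-compatible server; the exact URL and model identifier depend on how the model is deployed.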

Key Features

- Tool-Calling Enhancement: Adds deterministic tool-calling capabilities to popular open-weight LLMs.

- Open-Weight Model Base: Enhances leading models like Llama 3, Mistral, Gemma, Phi-3, and Qwen2.

- Agentic Use Case Focused: Designed specifically for applications requiring LLMs to interact with external tools.

- Mitigated Catastrophic Forgetting: Employs post-training methods to add skills without degrading existing capabilities.

- Local Inference Support: Extends llama.cpp and vLLM for running models locally.

- OpenAI-Compatible Format: Provides tool-calling in a familiar format for local deployments.

- GGUF Quantization: Offers quantized model versions for efficient resource usage.

- Free Online Demo: Allows quick testing via Hugging Face Spaces without downloads or login.

Use Cases

- Developing AI agents that interact with external APIs or databases.

- Building applications where LLMs perform actions based on user requests.

- Creating chatbots capable of executing external tasks.

- Integrating LLMs with existing software tools and workflows.

- Researching and experimenting with tool-augmented LLMs.

- Running advanced tool-calling models locally for privacy or cost reasons.
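For the agent-style use cases above, the application usually has to execute whatever tool the model asks for and return the result. The sketch below shows one way to dispatch OpenAI-format tool calls to local Python functions. The `get_weather` stub, the `TOOL_REGISTRY` mapping, the `run_tool_calls` helper, and the mock assistant message are all hypothetical; a real response would come from the model server rather than being hard-coded.

```python
import json

def get_weather(city):
    """Stub tool for illustration; a real tool would call an external API."""
    return {"city": city, "forecast": "sunny"}

# Maps tool names the model may request to local callables.
TOOL_REGISTRY = {"get_weather": get_weather}

def run_tool_calls(assistant_message):
    """Execute each requested tool and return OpenAI-style 'tool' messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        # In the OpenAI format, arguments arrive as a JSON-encoded string.
        args = json.loads(call["function"]["arguments"])
        func = TOOL_REGISTRY.get(name)
        if func is None:
            output = {"error": f"unknown tool: {name}"}
        else:
            output = func(**args)
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results

# Mock assistant message standing in for a model response (assumption).
mock_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
    }],
}

tool_messages = run_tool_calls(mock_message)
print(tool_messages)
```

The returned messages would then be appended to the conversation and sent back to the model so it can produce a final answer. Looking up tools in an explicit registry, rather than calling arbitrary names, keeps the model limited to functions the application has chosen to expose.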
