ModelBench

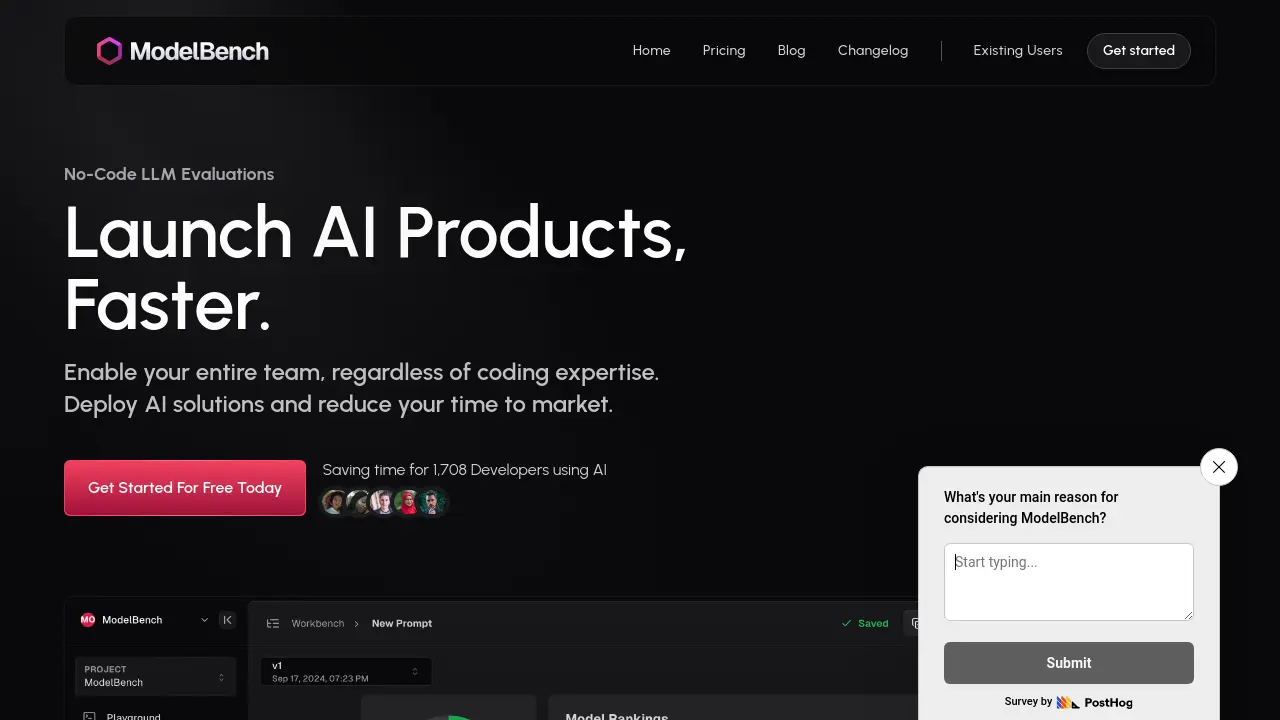

Launch AI Products, Faster.

Description

ModelBench is an AI-powered platform designed to accelerate the launch of AI products. It provides a no-code environment for LLM (Large Language Model) evaluations, enabling entire teams, irrespective of their coding proficiency, to deploy AI solutions and significantly reduce time to market.

The tool lets users craft and fine-tune prompts, integrate datasets, and benchmark prompts across 180+ LLMs. Users can test with confidence, experiment with numerous scenarios, and iterate rapidly. ModelBench is particularly useful for Product Managers, Prompt Engineers, and Developers who want to optimize AI development workflows, compare model responses, and ensure high-quality AI outputs through features like dynamic inputs and LLM run tracing.

Key Features

- No-Code LLM Evaluations: Enable your entire team, regardless of coding expertise, to deploy AI solutions.

- Compare 180+ Models Side-By-Side: Instantly compare responses across hundreds of LLMs and catch quality or moderation issues in minutes.

- Craft Your Perfect Prompt: Design and fine-tune prompts with ease, integrating datasets and tools seamlessly.

- Benchmark with Humans or AI: Use a mixture of AI and human evaluators based on your use case, running multiple rounds in parallel.

- Dynamic Inputs: Import prompt examples from sources like Google Sheets and test them at scale.

- Trace and Replay LLM Runs (Private Beta): Start tracing with no-code and low-code integrations, replay interactions, and detect low-quality responses.

- Test With Confidence: Experiment with countless scenarios without requiring coding or complex frameworks, iterating and improving rapidly.

- Playground Chats: Engage in interactive chats within a playground environment to test and experiment with models.
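To make the Dynamic Inputs idea above concrete, here is a minimal sketch of what filling one prompt template from spreadsheet rows looks like. It is not ModelBench's implementation: the `$name` placeholder syntax, the `render_prompts` helper, and the sample columns are all assumptions chosen for illustration, and the spreadsheet is represented as CSV text such as a Google Sheets export.

```python
import csv
import io
from string import Template


def render_prompts(template: str, csv_text: str) -> list[str]:
    """Fill one prompt template once per spreadsheet row.

    Hypothetical helper: the "$column" placeholder style is an
    assumption for this sketch, not ModelBench's own syntax.
    """
    rows = csv.DictReader(io.StringIO(csv_text))
    prompt_template = Template(template)
    # substitute() raises KeyError if a placeholder has no matching column,
    # which surfaces a mismatch between the template and the sheet early.
    return [prompt_template.substitute(row) for row in rows]


# Illustrative sample data standing in for a sheet exported as CSV.
SHEET_EXPORT = "product,tone\nheadphones,friendly\nkettle,formal\n"

prompts = render_prompts(
    "Write a $tone product description for $product.",
    SHEET_EXPORT,
)
for prompt in prompts:
    print(prompt)
```

Each rendered prompt can then be treated as a separate test case, which is what makes it practical to test one prompt design against many inputs at once.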

Use Cases

- Accelerating AI product development cycles.

- Optimizing prompts for various Large Language Models.

- Evaluating and comparing the performance of multiple LLM providers.

- Reducing time-to-market for AI-powered solutions.

- Facilitating collaborative prompt engineering within teams.

- Ensuring AI response quality and identifying moderation issues.

- Streamlining the AI model testing and iteration process.

Frequently Asked Questions

What are credits?

Credits are simple: a response from any model costs 1 credit, whether it comes from a playground chat or from a benchmark run. We show the credit cost before you run any action.
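As a rough illustration of the 1-credit-per-response rule, the sketch below estimates the cost of a benchmark before running it. It assumes a benchmark produces exactly one response per prompt, per model, per round; the `estimate_credits` function and that counting model are assumptions for this example, and the credit cost ModelBench displays before each action remains the authoritative figure.

```python
def estimate_credits(num_prompts: int, num_models: int, rounds: int = 1) -> int:
    """Estimate credits for a benchmark at 1 credit per model response.

    Assumption (not confirmed by ModelBench): every prompt is sent to
    every model once per round, and nothing else consumes credits.
    """
    if num_prompts < 0 or num_models < 0 or rounds < 0:
        raise ValueError("counts must be non-negative")
    return num_prompts * num_models * rounds


# 50 prompts compared across 4 models in a single round.
print(estimate_credits(num_prompts=50, num_models=4))  # 200
```

Because the cost grows multiplicatively, trimming the model list or the number of test prompts is the quickest way to keep a large comparison within a monthly allowance.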

Do I need API keys for LLM providers?

We use OpenRouter to bring the 180+ models into ModelBench. Unless you're only using free models in the playground, you'll need to connect your OpenRouter account. This is straightforward, and new OpenRouter accounts come with a small amount of free credits to get started. Support for using your own provider keys (non-OpenRouter) is on our near-term roadmap.

How accurate is the AI-based judging?

To "judge our judges", we use a panel of experienced LLM developers to evaluate and improve our AI judges, and we plan to share the details in an upcoming blog post. Across 120 different domains and use cases, we found the pass or fail result to be satisfactory 99.4% of the time on average. There are exceptions, which we are working to improve daily. For more complex use cases, team plans let you invite guest judges for hybrid AI and human benchmarking (useful for more creative tasks).

Do credits roll over to the next month?

They do not. We have a duty to keep our own OpenRouter account sufficiently rate limited, and allowing credit rollover would risk overloading the shared usage of certain application features.

Can I buy more credits?

To get more credits, you need to upgrade your plan or add more seats. If you're interested in enterprise pricing, get in touch.
